I have already written about how I feel about Apple Intelligence and what makes it unique amongst all the artificial intelligence models and technology out there. There are, however, a few things I know Apple could have done better:

1. Image generation capabilities

When Apple showed off Apple Intelligence's image generation capabilities, my first thought was that the results looked like something from Midjourney back in 2022. They didn't have the panache you would expect from Apple.

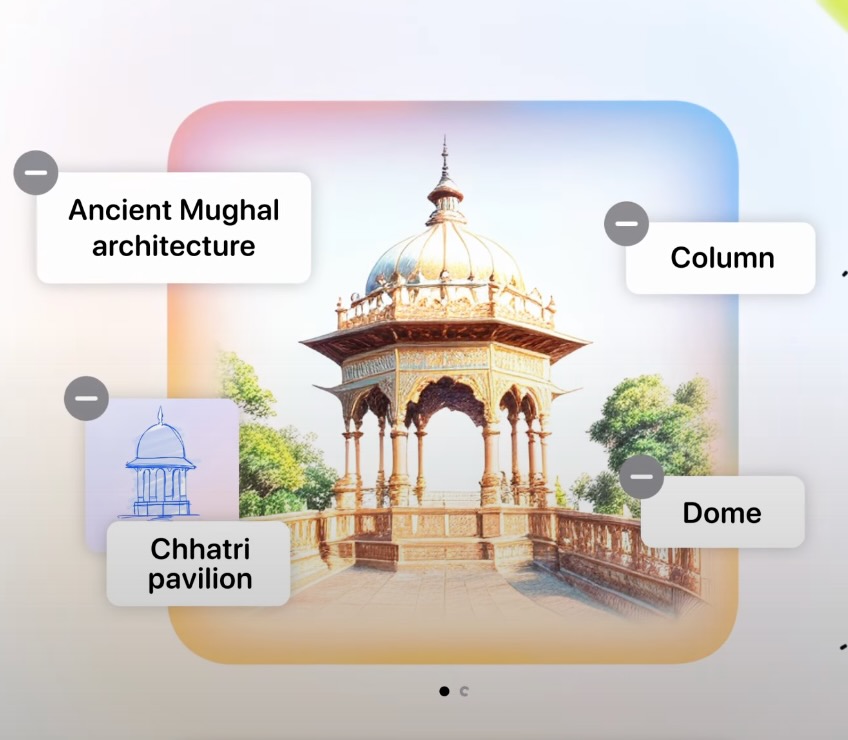

Apple Intelligence can generate images in three styles: sketch, illustration, and animation. Photorealistic images cannot be created because they could be misused for deepfakes, which is unarguably the right move. But these three styles simply do not look good. They don't have the Apple magic you would usually expect from the company.

Genmoji, which lets you create any emoji you like, actually looks good, but creating images is a different story. During the keynote we saw a hand-drawn sketch of architecture in India that Apple Intelligence "transformed" into an AI-generated image. Look at the images below and tell me which one looks unique. If I were you, I'd stick with the hand-drawn one. Yes, not everyone is artistically gifted, but the AI-generated one at the bottom looks bland and uninspiring.

I hope this is just Apple's first attempt and that, over the next few years, these generated images improve and stop looking like something that came out of Midjourney a couple of years ago.

2. Applebot web scraping

Apple has taken a privacy-first approach to personal data, which is great, but it has also scraped publicly available information from websites to train its AI models. Apple introduced Apple Intelligence at WWDC 2024, but this scraping had begun well before then, meaning your website and mine (and the data on them) might already have been scraped.

From Apple’s Machine Learning Research blog:

“We train our foundation models on licensed data, including data selected to enhance specific features, as well as publicly available data collected by our web-crawler, AppleBot. Web publishers have the option to opt out of the use of their web content for Apple Intelligence training with a data usage control.”

The point to note is that web publishers have the option to opt out. In other words, unless you explicitly tell Applebot not to use your site's content, it will. I personally expected Apple to be fair and not scrape the web for its AI training needs. That could have set Apple further apart in the race to become an artificial intelligence powerhouse, but here we are.

By the way, here is how you can configure your website to not let Applebot do its scraping.
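For reference, Apple's Applebot documentation (at the time of writing) describes a separate robots.txt user-agent token, `Applebot-Extended`, that controls whether your content is used to train Apple's foundation models, while the regular `Applebot` crawler used for Siri and Spotlight search can keep crawling. The minimal sketch below assumes that token name is still current; it uses Python's standard `urllib.robotparser` to confirm that a two-line robots.txt rule blocks `Applebot-Extended` without blocking `Applebot`. The `example.com` URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# robots.txt rules opting a site out of Apple Intelligence training.
# Assumption: "Applebot-Extended" is the token Apple documents for this;
# check Apple's Applebot documentation before relying on it.
ROBOTS_TXT = """\
User-agent: Applebot-Extended
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a compliant crawler would."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    page = "https://example.com/blog/post"  # placeholder URL
    # No rule matches plain Applebot, so search crawling is still allowed.
    print("Applebot:", rp.can_fetch("Applebot", page))
    # The Applebot-Extended rule disallows every path on the site.
    print("Applebot-Extended:", rp.can_fetch("Applebot-Extended", page))
```

On a real site, only the two `User-agent`/`Disallow` lines are needed, placed in the robots.txt file at the root of your domain. Disallowing plain `Applebot` instead would likely also keep your site out of Siri and Spotlight results, which is why the narrower token is the better choice here.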

3. Device and RAM limitations

Apple Intelligence is only coming to Macs, iPads, and iPhones. The HomePods, Apple TV, Apple Watch, and Vision Pro are notably absent. And not every Mac, iPad, or iPhone is getting it either: only Macs and iPads with an M-series chip qualify, and among iPhones only the iPhone 15 Pro models do. No, not even the non-Pro iPhone 15 models are getting it.

From what we have heard so far, it is a RAM limitation, not a lack of oomph in Apple's class-leading chipsets. In other words, the A16 Bionic in the iPhone 15 is capable enough, but its 6GB of RAM is the limiting factor, which is why the non-Pro iPhones that launched about eight months ago won't get Apple Intelligence. I can understand the frustration if you bought an iPhone 15 last year, but the lesson here is not to buy products expecting them to get every future software upgrade. This is not a cash grab by Apple to make you upgrade; it's simply how taxing these AI models can be.

That said, Apple could have been less stingy with RAM. Why MacBooks still start with 8GB of RAM is something I don't understand. It is not as if the hardware and software come from different companies or vendors. Apple knew what was coming and could have put at least 8GB of RAM in the non-Pro iPhones. Remember when iPhones started at 16GB of storage for ages and everyone in the industry made fun of it? This is that, but for RAM. And now Apple's stinginess has made this a frustrating experience for its customers.

Even more annoying is that the devices that rely on Siri far more (HomePods, Apple TV, Apple Watch, and Vision Pro) do not seem to be getting Apple Intelligence. If Siri is genuinely intelligent on your phone but your HomePod answers a simple question with "Sorry, I can't do that", it is going to get annoying real quick. I hope that over the next year Apple Intelligence comes to more devices. If RAM is the constraint, why not do all the processing on Private Cloud Compute? And if that is possible, why not give older iPhones limited Apple Intelligence capabilities too? The Apple Vision Pro has an M2 chip and 16GB of RAM, so why is it not getting Apple Intelligence? I am hoping this is simply a case of Apple prioritising which devices get Apple Intelligence first, and that more devices will get these features over the next few years.